FinOps is not a linear process with a clear start and end, but a continuous practice in which the FinOps team must continually mature its skills to adapt to the changing cloud environment of its organization.

This guide is designed to be used in conjunction with the FinOps Framework to understand your current operating maturity and current areas of attention.

This guide provides a set of concepts and measurements to support an analysis of the current state and planned goals of your overall FinOps practice. This assessment can be done at any level of an organization, from specific service or functional teams, to organizational divisions or entire business units. It is designed to measure operational maturity in consistent and well-understood terms, whether you are measuring one application team or the whole organization.

The goal of a FinOps Framework assessment is not to produce a precise analytical grade for the FinOps practice, but rather to provide a high-level overview of the current state and to identify targeted areas for the FinOps team to investigate or develop. This assessment does not need to be done for every FinOps Framework Capability at once. Targeting a single capability, or perhaps a set of related capabilities in a single domain, may allow for deeper analysis on a per-capability level. This can be especially useful when those capabilities were highlighted by an earlier assessment for further improvement.

For each assessment, be very clear in establishing your:

Include those who will have primary impact on the Scope and Group selected. Consider including those who might be targets for later assessments as well to give them some experience with the process.

We use the concept of a Lens to talk about how you inspect and analyze a FinOps Practice. These lenses are essentially different functional inspection views through which to consider any given Framework Capability, each requiring different effort and evidence to support.

FinOps Domains represent spheres of activity or knowledge, each designed to achieve an outcome for the organization.

FinOps Capabilities represent functional areas of activity in support of their corresponding FinOps Domains. Each Capability includes definitions, key personas, performance metrics, and functional activities involved in a real FinOps practice.

An end-to-end structural view of the entire delivery model of a FinOps Practice, incorporating the Domains, Capabilities, lifecycle, maturity model, and principles of FinOps.

A consistently applied measure of experience, breadth of ability, and success in the delivery of a specific element of the Framework. Maturity is not something to attain for the sake of being mature, but rather something one develops in response to the need to handle more complexity of cloud use or of organizational need when it produces value.

Preamble questions that form the basis of understanding to build a clear picture of the maturity level being set in the assessment.

Within this assessment process, we use the term “Target Score” to describe an intended goal for a particular FinOps practice. If there is a maximum assessment score of 20 within a capability (5 lenses, each with a maximum score of 4), then how perfect do we realistically expect that capability to become at this stage in our journey? How important is that capability? Perhaps a score of 12 out of 20 is “good enough” for our present roadmap, especially if it is a new muscle (capability) we are exercising.
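The arithmetic behind a Target Score can be sketched in a few lines of Python. The names `LENS_COUNT`, `MAX_LENS_SCORE`, `max_capability_score`, and `target_percentage` are illustrative assumptions rather than part of the Framework; the values mirror the 5-lens, 0-to-4 example in the text.

```python
# Illustrative sketch only: all names are hypothetical; the values come from
# the 5-lens, 0-to-4 example described in the text.
LENS_COUNT = 5        # Knowledge, Process, Metrics, Adoption, Automation
MAX_LENS_SCORE = 4    # each lens is scored from 0 to 4


def max_capability_score(lens_count: int = LENS_COUNT,
                         max_lens_score: int = MAX_LENS_SCORE) -> int:
    """Highest score a single capability can receive."""
    return lens_count * max_lens_score


def target_percentage(target_score: int) -> float:
    """Express a Target Score as a percentage of the maximum score."""
    maximum = max_capability_score()
    if not 0 <= target_score <= maximum:
        raise ValueError(f"target_score must be between 0 and {maximum}")
    return 100.0 * target_score / maximum


print(max_capability_score())   # 20
print(target_percentage(12))    # 60.0
```

Expressing the target as a percentage makes it easier to compare targets across capabilities if the number of lenses or the rating scale ever changes.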

The lenses provide insight into five key aspects of successfully performing each FinOps Capability. Each will help you identify potential strengths or areas for development in how you perform the Capability, and what steps you can focus on next.

Understanding each of these lenses is key to effectively applying a consistent analysis, which is critical to compare your improvement over time by performing subsequent assessments.

Each lens has five descriptive levels of performance, with examples of what would be expected at each level. Compare these descriptions to how you currently operate, and use the descriptions as a way to understand what more mature organizations need to do when their environments become complex. There is no need to be operating at higher levels in all cases. By reading the next higher level description, you can assess whether your organization would see value in that more advanced operating model. By understanding how you are operating from the perspective of each lens, it will be clearer where you might focus on improving your practices overall.

The KNOWLEDGE lens considers the breadth of understanding and awareness of this capability across the target group. When responding to this lens, consider how clearly this capability is communicated. How widely are this concept and its mechanisms, terms and processes known?

| Maturity | Heading | Description |
|---|---|---|
| 0 | No Knowledge | At this level, knowledge of the capability as defined by the framework is effectively non-existent within the target group. There are no discussions happening about this capability, and nobody is actively involved in developing any further knowledge. |
| 1 | Partial Knowledge | Preliminary knowledge of the Capability being assessed is now beginning to spread amongst key stakeholders and individuals, but the capability is not yet common knowledge across the target group. |
| 2 | Developing Knowledge | Basic knowledge of the Capability is understood by a limited number of key stakeholders, but information is shared only in limited, unscaled ways. |
| 3 | Full Knowledge | Strong knowledge of the assessed capability exists throughout the target group, incorporated into onboarding for new starters and reinforced periodically via training or other appropriate communication methods. |
| 4 | Knowledge Leader | The target group is now driving awareness of this capability beyond itself to other peer groups and the wider industry. It leverages deep awareness across individuals in the group to improve awareness and build new insights into the capability. |

PROCESS relates to both the set of actions being performed in order to deliver the capability being assessed, and the artefact defining and documenting those actions. Consider the efficacy, validity and prevalence of such processes.

| Maturity | Heading | Description |
|---|---|---|
| 0 | No Process | There are no described, consistent processes in place governing any aspect of the assessed capability within the target group. |
| 1 | Implementing Process | Processes to standardise this assessed capability are being formed, defined and possibly tested. Processes are not yet formalised as BAU (Business As Usual) for the target group. |
| 2 | Developing POC Process | Relevant, functional processes are now in place, agreed upon and being followed by at least some of the teams. |
| 3 | Scaled Process | Scaled processes are now in place and documented, and periodic feedback loops have been implemented. |
| 4 | Mature (Agile) Process | Clearly defined, transparent, and fully integrated processes are now universally established and consistent. This does not necessarily mean there is one process for each task, but that the processes in place are all in this state. Processes are being iterated on with key stakeholder feedback and ongoing agile refinement. |

Is this capability measured? Is there a way to measure and prove progress over time? How are those measurements obtained, and how relevant are they?

It is important to note that this lens does not measure how high any metric scores are, but how the measurements themselves are defined and used.

| Maturity | Heading | Description |
|---|---|---|
| 0 | No Metrics | There are no measurements being taken at this point. No direct insight is available concerning the progress or current state of this capability. |
| 1 | Identified Metrics | Key metrics that the business finds valuable for making trade-off decisions about this capability have been identified. |
| 2 | Baselined Metrics | Initial, manually generated metrics are in place, providing rudimentary traffic-light (e.g., Red/Yellow/Green) measurement of this capability. |
| 3 | Established & collecting Metric Targets data | KPIs are now in place covering at least some of this capability within the target group, and a cadence has been established for collection. |
| 4 | Mature, Global KPIs | S.M.A.R.T. KPIs are now globally in place, automated, and refined and iterated on with a regular cadence. They link directly to business goals and business performance. |

Consider the knowledge, processes and metrics that your target group uses to govern this capability. How widely has this capability been adopted and accepted by the target group as part of its integral and critical functions? Consider the prevalence and presence of this capability across the entire target group being assessed.

| Maturity | Heading | Description |
|---|---|---|
| 0 | No Adoption | The capability is not in place anywhere within the target group. |
| 1 | Siloed Adoption | Elements of the capability are being adopted by a siloed group, lacking standardization and evidence of best practices. |
| 2 | Initial Standardized Adoption | Early elements of the capability are being standardized and vetted by a few individuals within the target group. |
| 3 | Key Adoption | Fully established, standardized elements of the capability are part of BAU (Business As Usual) behaviors for the majority of the target group. |
| 4 | Full Adoption | The capability now exists in totality across all elements of the target group, and is being actively engaged with and worked on. |

Why spend valuable time executing a repeatable task with predictable decision points and iterations? Automation drives consistency, speed and scalability across your target group.

| Maturity | Heading | Description |
|---|---|---|
| 0 | No Automation | Virtually all of the required actions within the Capability are being executed manually at this stage. |
| 1 | Identified Automation Opportunities | The target group is starting to identify and map its automation requirements for a solution that will reduce labor, time and toil. |
| 2 | Experimental Automation | Some key actions are beginning to be optimised through early adoption of automated workflows and solutions. |
| 3 | Primary Automation | Primary or most demanding actions have now been offloaded to automation solutions. |
| 4 | Full Automation | All material, repeatable tasks within this capability are now automated. Any new tasks are reviewed for automation potential or implemented as automation as the capability evolves. |

When choosing the scope of an assessment we will define two key parameters:

Establish your target group by focusing on groups that stand out in some way. Again, it is better to focus on one or a few groups than to try to obtain average scores across many groups that are at vastly different levels of maturity in their practice.

When you are considering a target group and scope, look at some of these attributes to pick a meaningful and useful group. Consider the following attributes as examples when defining scope parameters:

If you intend to repeat assessments over time for trending / baselining purposes, then be sure to use the same Target Scope and Target Group when you reassess to maintain the validity and trust placed in the data by the wider business.

Maintain a record of the scope along with all instances of baseline and analysis of the framework, to ensure consistency and context.
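One way to keep such a record is a small, immutable data structure that travels with every set of scores. The sketch below is an assumption about how that might look, not a format defined by the Framework: `AssessmentRecord`, its fields, and `is_comparable` are hypothetical names, and the team and dates are made-up examples.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet


@dataclass(frozen=True)
class AssessmentRecord:
    """Hypothetical record of the context for one assessment iteration."""
    assessed_on: date
    target_group: str               # who was assessed
    target_scope: FrozenSet[str]    # which capabilities were assessed


def is_comparable(earlier: AssessmentRecord, later: AssessmentRecord) -> bool:
    """True when two assessments share the same Target Group and Target Scope,
    so their scores can reasonably be trended against each other."""
    return (earlier.target_group == later.target_group
            and earlier.target_scope == later.target_scope)


# Made-up example values for illustration.
baseline = AssessmentRecord(date(2024, 1, 15), "Platform team",
                            frozenset({"Allocation", "Forecasting"}))
follow_up = AssessmentRecord(date(2024, 7, 15), "Platform team",
                             frozenset({"Forecasting", "Allocation"}))
print(is_comparable(baseline, follow_up))  # True
```

Storing the scope as a set means the order in which capabilities were listed does not affect whether two assessments are treated as comparable.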

Once the environment scope has been defined, target scope needs to be considered. Trying to analyze all of the (currently 22) capabilities within the FinOps Framework is a tall order.

When defining your target scope, focus on the capability or capabilities where you believe spending some FinOps team time will deliver high business value. This will oftentimes be in the Understand Cloud Usage & Cost domain if you are just starting out, or in the Optimize Cloud Usage & Cost, Quantify Cloud Value, or Manage the FinOps Practice domains if you are a little more established. It is likely better to assess fewer capabilities and keep the assessment focused than to assess too many. Remember, like FinOps itself, we benefit from starting small and growing in scale and complexity once we have established some muscle memory from repetitive practice.

Depending on the nature and strengths of the business, different capabilities will interact and support one another in different ways. Therefore, consider which capabilities are most useful for you to track at this time in your FinOps journey. For example, an organization just adopting a FinOps culture would likely wish to assess foundational Capabilities such as Allocation, Reporting & Analytics, Data Ingestion (particularly if not using a third party FinOps tool), Rate Optimization, Workload Optimization and FinOps Education & Enablement. An organization later in its adoption might choose to focus on Capabilities that allow it to interact more effectively with the rest of the organization, such as Forecasting, Budgeting, Invoicing & Chargeback, and Unit Economics.

While it is conceptually possible to assess all of the framework capabilities, this would take a significant amount of time and work from you and those in your target group. To produce a repeatable, meaningful, targeted assessment, you need to ensure a tight focus on the specific areas you are looking to progress at that stage in the FinOps adoption journey.

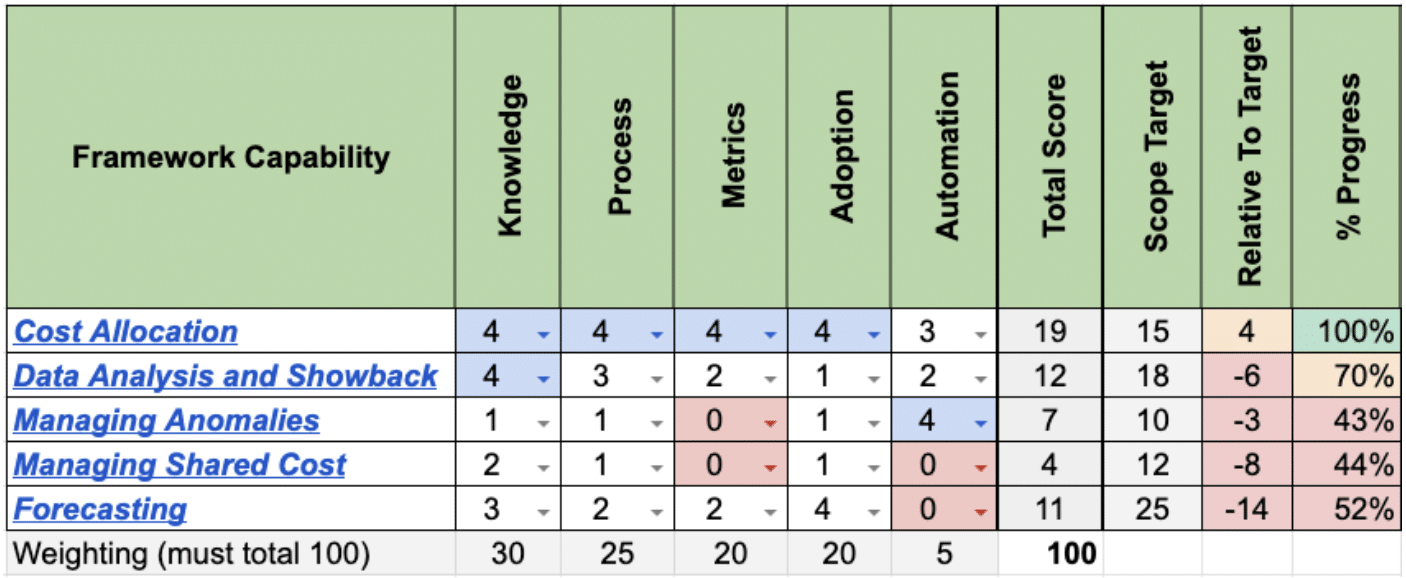

Consider the priorities of Knowledge, Process, Metrics, Adoption and Automation across your Target Scope and Target Group. Bear in mind what is currently a realistic achievement, and what is most important to improve. For example: Is there a minimal level of knowledge and process likely to be in place currently? You might want to consider weighting that higher in your assessment – whilst reducing the impact of the Automation score, which is likely to be low if there is no existing knowledge or process. Think within the context of what you are trying to promote efforts towards.

When selecting your Target Scores, consider the relative levels of success you want to aim for across the various capabilities you have identified. Some capabilities may warrant a high target level of maturity, but others could be brand new disciplines that take time to perfect. What level are you aiming to reach in the foreseeable future? Setting a realistic short-term Target Score goal helps you to measure success against your own level of “done.”

The target scope and target group will help to identify the relevant stakeholders and SMEs required. Remember that different capabilities may well necessitate different stakeholders and adjacent personas, and likely different targeted discussions in order to reach the most accurate answers for analysis. Record the stakeholder details and their evidence / data contributions within the assessment data, ensuring that the context is retained for future baseline analysis. Consider including your stakeholders when setting your Target Scores.

Once the Target Scope, Target Group, Lens Weighting and Target Scores have been defined and Stakeholders / SMEs are identified, you are now fully prepared to execute an iteration of the FinOps Assessment. For each selected capability across the Target Scope, you can now plan your conversations. Consider grouping capabilities covered by the same subset of SMEs / stakeholders where applicable.

It is recommended to begin by giving a short overview of the Target Scope, reviewing each capability and ensuring that everyone present has an awareness of what that capability is and where it fits within the overall business. Then confirm the defined scope, and ensure that context has been clearly communicated before beginning. Use this overview as an opportunity to broaden education about FinOps and ensure that all involved parties are at a good level of knowledge about what is being assessed.

Before diving into questions related to the 5 lenses, explore some Discovery Questions. It is recommended to develop and curate a library of capability-specific discovery questions that you will use to begin gathering evidence and data to further support a decision on scoring for each lens within the assessment proper. Where possible, gather documented evidence to support answers to these questions, and ensure this evidence is associated with the rest of the assessment information to ensure context and support confidence in an accurate outcome.

For each of the lenses defined above, use the input from the evidence gathering discussions along with overall consideration of the performance of that lens within the context of that capability and scope, to decide upon a suitable maturity score for that lens. Try to be as consistent and fair as possible when scoring, and keep in mind the overall realistic FinOps goals held for that capability within that context.

If there is disagreement among the stakeholders you are interviewing, this is good. It means you have identified an area where there is likely a lack of common understanding, or a lack of common experience, so you have an opportunity to begin correcting this right away. It may also indicate that the people taking part in your assessment are engaged and focused, which is another good indicator. Use this opportunity to educate, enable, and align.

Score each capability as a percentage of a potential maximum. For example, if your rating scale goes from 0 to 4 and you have 5 lenses, the total maximum score for a capability is 20. Each assessed lens can also then be given a weighting, according to business priorities for focus at that stage in the journey. That scoring might look a bit like this, for a subset of 5 capabilities (note that this example is not yet updated to reflect the Framework 2024 updates):

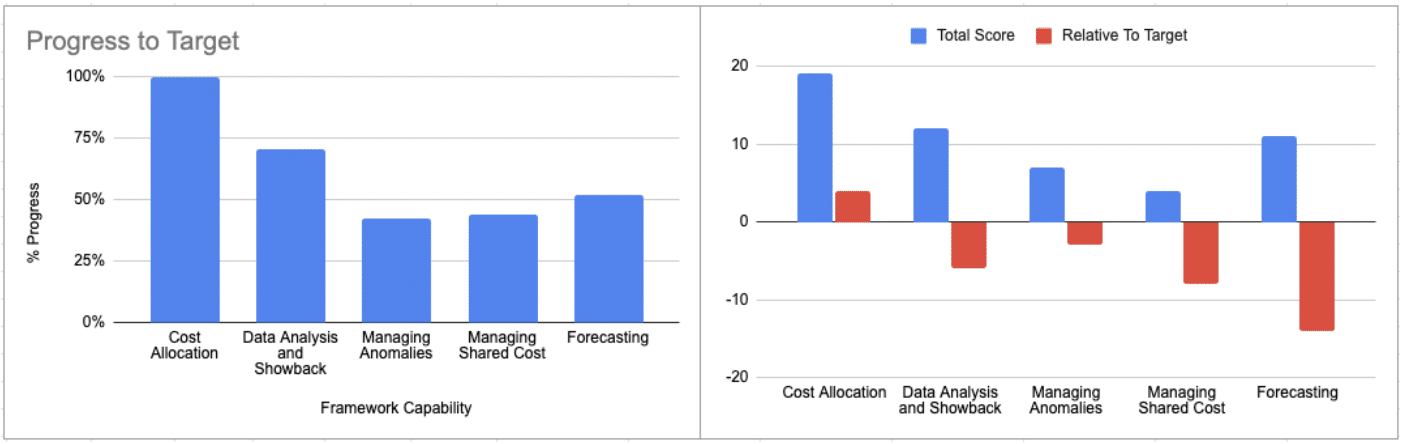
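The guide does not prescribe an exact weighting formula, so the following is a minimal sketch under one plausible assumption: each lens score is multiplied by its weight, and the result is divided by the weighted maximum. The function name `capability_percentage`, the example scores, and the example weights are all hypothetical and are not taken from the figure above.

```python
from typing import Dict, Optional

LENSES = ("Knowledge", "Process", "Metrics", "Adoption", "Automation")
MAX_LENS_SCORE = 4  # each lens is scored from 0 to 4


def capability_percentage(scores: Dict[str, int],
                          weights: Optional[Dict[str, float]] = None) -> float:
    """Score one capability as a percentage of its (weighted) maximum.

    Assumed formula: sum(score * weight) / (sum(weight) * MAX_LENS_SCORE).
    With no weights supplied, every lens counts equally.
    """
    if weights is None:
        weights = {lens: 1.0 for lens in LENSES}
    for lens in LENSES:
        if not 0 <= scores[lens] <= MAX_LENS_SCORE:
            raise ValueError(f"{lens} score must be between 0 and {MAX_LENS_SCORE}")
    total_weight = sum(weights[lens] for lens in LENSES)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(scores[lens] * weights[lens] for lens in LENSES)
    return 100.0 * weighted_sum / (total_weight * MAX_LENS_SCORE)


# Hypothetical scores for a single capability.
scores = {"Knowledge": 3, "Process": 2, "Metrics": 0, "Adoption": 2, "Automation": 0}
# Hypothetical weighting that emphasizes Knowledge and Process and
# reduces the impact of Automation.
weights = {"Knowledge": 2, "Process": 2, "Metrics": 1, "Adoption": 1, "Automation": 0.25}

print(capability_percentage(scores))           # 35.0 (7 out of 20)
print(capability_percentage(scores, weights))  # 48.0 (12 out of 25)
```

Dividing by the weighted maximum keeps every result on the same 0 to 100 scale, so weighted and unweighted assessments remain readable side by side.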

Once you have these metrics, if you have chosen to assess multiple capabilities you can aggregate scores to provide an overall evaluation outcome – as well as highlight areas of low (or zero) scoring, which will contribute to your outcome analysis.

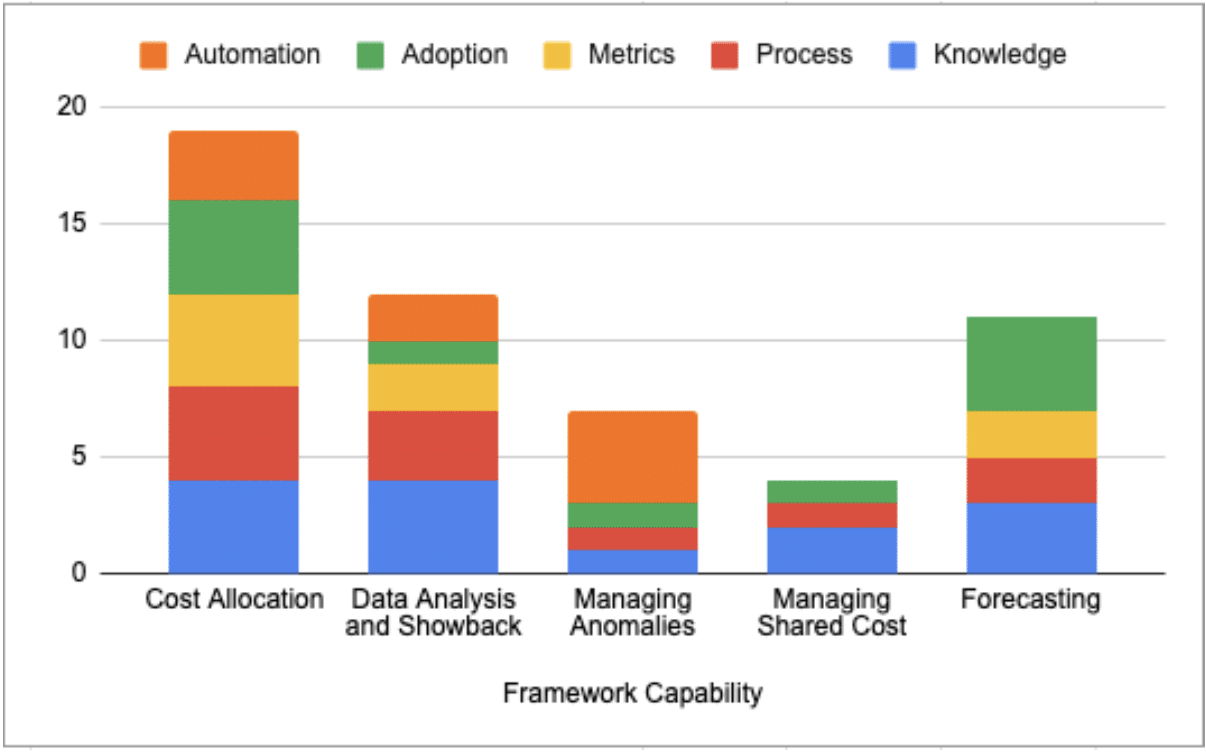

For the imaginary example used in this guide, the 5 assessed capabilities were broken down further into their stacked assessment components to highlight the strengths and weaknesses across the set – and to further highlight which areas had the most improvement needed (and associated effort).

You can see that Knowledge and Process are comparatively strong areas for most of this set of capabilities, with Adoption spreading as well. There are still improvements to focus on for Metrics, and Automation has begun in some capabilities. It is clear that a number of other capabilities are yet to begin leveraging tooling to improve workflow efficiency and effectiveness. Previous efforts to drive an improvement in Reporting & Analytics have resulted in a strong overall score for that capability, which provides a good grounding for other capabilities that depend heavily upon it (for example, “Anomaly Management”) to be further developed.
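The aggregation and zero-score highlighting described in this section can be sketched as follows. This assumes the overall outcome is a simple mean of unweighted per-capability percentages, which the guide does not mandate; `overall_percentage`, `zero_scoring_lenses`, and the scores shown are hypothetical and do not reproduce the figures above.

```python
from statistics import mean
from typing import Dict, List

MAX_LENS_SCORE = 4  # each lens is scored from 0 to 4
Scores = Dict[str, Dict[str, int]]  # capability -> lens -> score


def overall_percentage(assessment: Scores) -> float:
    """Mean of the unweighted percentage scores of all assessed capabilities."""
    per_capability = [
        100.0 * sum(lenses.values()) / (len(lenses) * MAX_LENS_SCORE)
        for lenses in assessment.values()
    ]
    return mean(per_capability)


def zero_scoring_lenses(assessment: Scores) -> Dict[str, List[str]]:
    """For each capability, list the lenses that scored zero (if any)."""
    flagged: Dict[str, List[str]] = {}
    for capability, lenses in assessment.items():
        zeros = [lens for lens, score in lenses.items() if score == 0]
        if zeros:
            flagged[capability] = zeros
    return flagged


# Hypothetical scores, not the values shown in the guide's figures.
assessment = {
    "Allocation": {"Knowledge": 3, "Process": 3, "Metrics": 2,
                   "Adoption": 2, "Automation": 0},
    "Forecasting": {"Knowledge": 2, "Process": 2, "Metrics": 0,
                    "Adoption": 1, "Automation": 0},
}

print(overall_percentage(assessment))   # 37.5
print(zero_scoring_lenses(assessment))
# {'Allocation': ['Automation'], 'Forecasting': ['Metrics', 'Automation']}
```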

From this data, you can now clearly identify areas for improvement and further discussion using the provided evidence to uncover any potential blockers to address – and develop a roadmap to deliver a delta for the next assessment.

If you retain this data and repeat the assessment periodically using the same scope and criteria, you can easily highlight score changes on this grid and trend improvements over time.
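As a rough illustration of trending, the change between two assessments can be computed lens by lens, provided both cover the same capabilities and lenses. The function name `score_deltas` and the sample scores are hypothetical.

```python
from typing import Dict

Scores = Dict[str, Dict[str, int]]  # capability -> lens -> score (0 to 4)


def score_deltas(previous: Scores, current: Scores) -> Scores:
    """Per-lens change in score between two assessments of the same scope.

    Raises ValueError when the two assessments do not cover the same
    capabilities and lenses, because the comparison would not be valid.
    """
    if previous.keys() != current.keys():
        raise ValueError("assessments cover different capabilities")
    deltas: Scores = {}
    for capability, lens_scores in current.items():
        if previous[capability].keys() != lens_scores.keys():
            raise ValueError(f"{capability} was assessed with different lenses")
        deltas[capability] = {
            lens: score - previous[capability][lens]
            for lens, score in lens_scores.items()
        }
    return deltas


# Hypothetical scores from two assessment iterations.
previous = {"Allocation": {"Knowledge": 2, "Metrics": 0}}
current = {"Allocation": {"Knowledge": 3, "Metrics": 1}}
print(score_deltas(previous, current))
# {'Allocation': {'Knowledge': 1, 'Metrics': 1}}
```

A negative delta is as useful as a positive one: it points to a lens where practice has slipped since the previous iteration.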

Now that you have analyzed the outcome data, you can then use all the data to guide your decisions to focus deeper into a specific area using the discovery questions you asked in the earlier “Gathering Evidence” section.

Consider how to surface key areas of focus and highlight missing capability components that could unlock success in both that capability and adjacent ones.

In the example given above, let’s look at the “Managing Shared Cost” capability (now a part of the Allocation Capability in Framework 2024). It is immediately clear that Managing Shared Cost has space for further development, particularly in both Metrics and Automation, where there is a zero score right now. Effectively, you have no insights into the progress or success within that area and there is no tooling in place to support the work. The capability is beginning to be understood, however; it is being actioned at some level, and adoption is growing. This might still result in successful delivery of the Capability outcome of actually distributing and managing the shared cost within the cloud platforms…

But are those results being delivered in the most optimal way?

Firstly, you have no idea how effective the shared cost effort is, because you have no metrics to prove it one way or another. And secondly, because there is no automation, you might reasonably assume that the success of that capability rests largely on human effort. Depending on what the business priorities are, that could be viewed as a risk. Building metrics and automation into that capability could increase its effectiveness, whilst freeing time for people to deliver greater value elsewhere.

Consider the Capability Hierarchy as discussed earlier in the “Target Scope” section, and look at which of the current Capabilities within the focus group are going to be heavily leveraged in the next stage of the roadmap. Use this insight, alongside the analysis delivered in stage 3, and the context provided within the Capability Discovery section, to formulate your priorities for your next primary adoption acceleration focus.

The FinOps Foundation extends its gratitude to the hard-working members of the Working Group: